Pataphysica D

Nonlinear twists of the imagination.

Saturday, June 17, 2017

Friday, December 2, 2016

10 Books You Should Read ASAP

(Or six important books that will make you realize that everything you thought you knew is wrong, as they say in other clickbaits.)

First published in 1944, Horkheimer & Adorno's The Dialectic of Enlightenment still echoes in debates about rationality and about a scientific progress that aims at control over nature while alienating us from it. Adorno and Horkheimer's witty and erudite analyses of the entertainment industry, and of totalitarian regimes and the naïve press and pundits who fail to foresee their rise to power, remain insightful today.

Humanity, with its powerful brain capacity, is not a deviation from natural history, they write; with our machines, chemicals and organizational powers extending our physiology, we are able to promote our own species at the cost of eradicating other species. In the bigger perspective, ideas about freedom and justice, religions and political persuasions are relevant only insofar as they increase or diminish our species' prospects of survival on Earth or in the universe.

As Adorno and Horkheimer presciently write:

The human capacity for destruction promises to become so great that―once this species has exhausted itself―a tabula rasa will have been created. Either the human species will tear itself to pieces or it will take all the earth's fauna and flora down with it, and if the earth is still young enough, the whole procedure―to vary a famous dictum―will have to start again on a much lower level.

The Sixth Extinction by Elizabeth Kolbert fills in the details of this ongoing destruction. It begins with some history of science from a time when it was not yet known or accepted that species could go extinct. Even as fossils of long-extinct species were being displayed in cabinets of curiosities, Carl von Linné "made no distinction between the living and the dead [species] because, in his view, none was required", Kolbert writes. By the late 18th century, the discovery of the mastodon, whose bones and teeth did not quite match those of any known animal, led Cuvier to the idea of an animal still larger than the elephant. If such enormous animals were still around, they surely would have been spotted somewhere, but since none had been seen, Cuvier concluded that they must have gone extinct.

Kolbert's book offers many glimpses into the process of establishing scientific theories, as popular science books should. Habitat loss, invasive species, climate change and various forms of pollution are among the threats to the survival of many species. Building roads through rainforests shrinks habitats, trapping highly specialized species in small regions where they become more vulnerable to forest fires, hunting, diseases and other threats. Species are introduced on purpose, as we have done with some plants, or inadvertently, as with rats and snails. If no local predators feed on the introduced species, they may spread very efficiently and displace indigenous species. There is no single mechanism behind all this biodiversity loss, but there is no doubt that it is closely related to the growth of the human population and our exploitation of natural resources.

Although modern civilization has accelerated the threats to biodiversity, many species (such as flightless birds) that were simply too easy to hunt went extinct at various times as humans began to colonize the continents. The megafauna of those times reproduced very slowly and had no natural enemies. As prehistoric humans occasionally managed to kill these animals, their populations declined too gradually for anyone to notice, until there were none left.

Kolbert does not moralize, and although it is clear that humans are to blame for the current mass extinction, the book also contains many touching stories about wildlife conservationists who go to exceptional lengths to save endangered species. In fact, some species would not exist today were it not for sustained efforts to keep them alive, as is the case with some frogs that have to be kept in "frog hotels" because they would not survive fungal infections in the wild.

And as the ecosystems break apart, eventually humans too will be at risk. One of the researchers Kolbert met while writing the book speculates that rats will be the most advanced species to remain.

The threat that biodiversity loss poses to humans is one of many complementary perspectives to keep in mind when reading a book such as Nick Bostrom's Superintelligence. Bostrom argues that an artificial intelligence explosion is possible; it would happen when an AI discovers how to improve its own intelligence. At that point it would improve itself at an exponentially increasing rate, within days or even seconds attaining an incomprehensible level of intelligence that far surpasses that of the most intelligent humans. Once a superintelligence exists, the further fate of humanity would depend on it.

That part of Bostrom's argument is impeccable, but there are some serious omissions and weak spots in this very detailed book. First, there is little discussion of what constitutes intelligence in humans, and what would qualify as intelligence in a machine. For example, it is not clear whether intelligence must be accompanied by consciousness, although Bostrom seems to think so. At the same time, he suggests that a superintelligence is likely to take such an unfamiliar form that we might not even recognize it.

Second, since the superintelligence would develop on computers (a single computer or a cluster), it would be enlightening to discuss the physical requirements for such an intelligence explosion. There must be physical constraints in terms of materials and energy consumption. If the physical computing resources are limited, any increase in an AI's capacities must come from software improvements, which must saturate at some point. On the other hand, given the enormous data centers devoted to scientific number crunching, internet storage and mass surveillance, the computing resources needed for an artificial intelligence takeoff may already be there, waiting to be filled with the appropriate algorithms.

The belief in accelerating scientific and technological progress, which Bostrom seems to share, is far from the unfettered techno-optimism celebrated by transhumanists, but Bostrom still fails to consider the complexity of the current situation from all the relevant angles.

Although Elon Musk and Stephen Hawking have seconded his concerns about an AI takeover, many have warned about a more immediate concern: the effects of automation on the labour market. To be fair, Bostrom briefly mentions how the horse population dwindled as automobiles took over the work horses had performed, noting that a similar course of events could make a large part of the human labour force superfluous. And of course this is already happening. Some of the lost jobs may be replaced by new ones for more skilled workers, but jobs will be lost faster than they are replaced.

Bostrom is probably right about our inability to grasp the nature of superintelligence and our over-estimation of our intellectual capacities:

Far from being the smartest possible biological species, we are probably better thought of as the stupidest possible biological species capable of starting a technological civilization―a niche we filled because we got there first, not because we are in any sense optimally adapted to it.

But with some effort, we are able to discover our cognitive biases, many of which are discussed in Daniel Kahneman's Thinking, Fast and Slow. According to Kahneman and some other psychologists, we have two cognitive systems. One is fast and intuitive. Relying on intuition and stereotypes, it usually gets things right, but it checks no facts and does not stop to think critically, so occasionally it gets things badly wrong. The slow system is used for hard problems, such as mentally multiplying 18 × 23, checking the validity of a logical argument, or anything else that demands prolonged attention.

A large part of Kahneman's book is devoted to behavioural economics and effects such as loss aversion. Experiments have shown that most people are reluctant to make an investment if there is some chance of a net loss, even when the expected gain is positive. We are not always as rational as we would like to believe, but at least we are able to demonstrate our own cognitive limitations through scientific experiments.
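The gap between the expected value of a gamble and how it feels can be made concrete with a small worked example. This is a minimal sketch with illustrative numbers: a coin toss that either wins 150 or loses 100, and a loss-aversion factor of 2 (losses weigh twice as much as gains of the same size). It deliberately ignores the other ingredients of prospect theory, such as probability weighting and diminishing sensitivity.

```python
def expected_value(outcomes):
    """Probability-weighted sum of (probability, payoff) pairs."""
    return sum(p * x for p, x in outcomes)


def loss_averse_value(outcomes, loss_aversion=2.0):
    """Same sum, but losses are multiplied by the loss-aversion factor."""
    return sum(p * (x if x >= 0 else loss_aversion * x) for p, x in outcomes)


coin_flip = [(0.5, 150.0), (0.5, -100.0)]
print(expected_value(coin_flip))     # 25.0: a positive expected gain
print(loss_averse_value(coin_flip))  # -25.0: yet the gamble "feels" like a loss
```

With these numbers a rational agent should accept the bet, while a loss-averse one rejects it.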

Behavioural economics is limited to the individual perspective, that is, to microeconomics. It prides itself on proving neoclassical theories of the rational agent wrong, but has nothing to say about the role of economics at the level of societies or nations. Piketty sheds light on the history of economic inequality over the last two centuries in Le capital au XXIe siècle (discussed elsewhere). In short, the rich get richer for various reasons, notably because, at a certain level, the return on capital investment is greater than the economic growth rate. Wealth therefore accumulates among those who own stock or real estate. Technological inventions and automation also tend to increase inequality by raising demand both for well-paid, high-skilled workers and for low-skilled workers, while the middle segment is hollowed out.
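The mechanism behind "the return on capital is greater than the growth rate" is just compound interest. A minimal sketch, assuming a fortune whose entire return r is reinvested (no consumption or taxes) while incomes grow with the economy at rate g; the rates 5% and 1.5% are illustrative, not figures from Piketty's book.

```python
def wealth_to_income_ratio(r, g, years, start_ratio=1.0):
    """Ratio of a fortune growing at rate r (all returns reinvested)
    to an income growing at the economy's rate g, after `years` years."""
    return start_ratio * ((1 + r) / (1 + g)) ** years


# Illustrative rates: 5 % return on capital, 1.5 % economic growth
for years in (10, 50, 100):
    print(years, round(wealth_to_income_ratio(0.05, 0.015, years), 1))
```

Whenever r > g the ratio grows geometrically, so the fortune keeps pulling away from incomes; with r < g it shrinks instead.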

Wilkinson & Pickett's The Spirit Level demonstrates the effects of economic inequality on societies and on individuals. They show that more equal countries fare better in several respects, including drug abuse, violence, health, literacy, imprisonment and social mobility. The Scandinavian countries and Japan rank among the most equal, whereas the USA and Great Britain are two of the most unequal of the countries considered. As a matter of method, Wilkinson & Pickett restrict their study to developed countries in order to separate the effects of economic inequality from the general level of wealth in a country. Comparing the American states, they find the same pattern at that level.

Although the point of The Spirit Level is to display the negative consequences of inequality for health, social mobility and so on, each of the problem areas the book touches on has its own set of causes. For example, the high imprisonment rate in the USA has other causes besides inequality, notably that prisons have become a for-profit industry.

Moreover, although several pieces of evidence point in the same direction, namely that inequality causes the adverse effects in all the domains they describe, it would be unwarranted to rule out causation in the opposite direction in some cases, such as high imprisonment rates and illiteracy leading to increasing inequality. The findings of Wilkinson & Pickett have been questioned, but they have posted rebuttals of the points raised by their critics.

One of the most urgent questions now is how to reduce the environmental impact of economic activity. The viable solution, according to Wilkinson and Pickett, is a steady-state economy which, instead of growing, adapts to changing demands. Such an economy will have its best chances in an equal society where consumerism gives way to community building.

The astute reader will have noticed that this list of urgent reading does not add up to ten titles. The reason is that the reviewer has yet to read four of those important books.

Saturday, June 4, 2016

The joy of frequency shifting

Frequency shifting, also called single sideband (SSB) modulation, differs from the better-known ring modulation in that it produces fewer partials in the resulting spectrum. Whereas ring modulation amounts to convolving the carrier's spectrum with the input signal's spectrum (for a sinusoidal carrier, each partial is replaced by a sum and a difference frequency), single sideband modulation just shifts the partials up or down in frequency. Harmonic tones therefore become inharmonic, unless the amount of shift happens to equal the frequency of one of the harmonics, i.e. an integer multiple of the fundamental, in which case every partial lands on another harmonic.
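A minimal digital sketch of the difference, assuming NumPy and a test tone with partials at 100, 200 and 300 Hz (illustrative values). Frequency shifting is done here with the common Hilbert-transform approach: form the analytic signal, multiply it by a complex exponential at the shift frequency and keep the real part. Ring modulation is a plain multiplication by a sinusoidal carrier. This is one of several possible DSP implementations, not the method of any particular module, and the helper names are hypothetical.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal via the FFT: keep DC/Nyquist, double positive, zero negative bins."""
    n = len(x)
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def frequency_shift(x, shift_hz, fs):
    """Single sideband shift: every partial moves by shift_hz."""
    t = np.arange(len(x)) / fs
    return np.real(analytic_signal(x) * np.exp(2j * np.pi * shift_hz * t))


def ring_modulate(x, carrier_hz, fs):
    """Ring modulation with a sinusoidal carrier: sum and difference frequencies."""
    t = np.arange(len(x)) / fs
    return x * np.cos(2 * np.pi * carrier_hz * t)


def peak_frequencies(y, fs, threshold=0.1):
    """Frequencies (Hz) of spectral bins above threshold * the largest peak."""
    mag = np.abs(np.fft.rfft(y)) / len(y)
    bins = np.flatnonzero(mag > threshold * mag.max())
    return (bins * fs / len(y)).tolist()


fs = 8000
t = np.arange(fs) / fs  # exactly one second, so FFT bins are 1 Hz apart
tone = sum(np.sin(2 * np.pi * f * t) for f in (100, 200, 300))

print(peak_frequencies(frequency_shift(tone, 30, fs), fs))  # [130.0, 230.0, 330.0]
print(peak_frequencies(ring_modulate(tone, 30, fs), fs))    # [70.0, 130.0, 170.0, 230.0, 270.0, 330.0]
```

The shifted partials keep their 100 Hz spacing but are no longer multiples of it, which is what makes the result sound inharmonic, while ring modulation doubles the number of partials. A negative `shift_hz` shifts downward; partials pushed below 0 Hz fold back into positive frequencies.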

SSB modulation can be carried out with analog as well as digital techniques. There has been much prejudice and heated debate about the advantages of analog vs. digital. In this comparison between an analog eurorack module and several DSP implementations of SSB, one can hear differences in their sound, but that is not to say that everyone will agree on what sounds better or which method would be preferred in a given situation.

Friday, December 11, 2015

On Modular Purism and Sozialrealismus

Among practitioners of modular synthesis, there is today a widespread reverence for the pure modular, where the modular shines on its own as the single sound source, preferably recorded in one take and with minimal post-processing. In improvised music this kind of purism may make sense, but modulars are often used in other ways, e.g. driven by sequencers or set up as a self-generating system more or less nudged in the right direction by the modularist.

In a live concert, overdubs and edits are obviously not part of the game. Perhaps the spontaneous flow of live performance is taken as the ideal that even home studio recordings should mimic.

This ideal of purity often serves as an excuse for acquiring a voluminous modular system, even more so if one wants to achieve full polyphony. However, there is so much to gain from multitracking and editing that it is a wonder anyone with a modular would refuse to deal with that part of the process.

The pieces on the album SOZIALREALISMUS are collages and juxtapositions of a variety of sources. All pieces are centered around recordings of a eurorack modular, often accompanied by field recordings.

In some pieces the electronic sounds were played through loudspeakers placed on the resonating bodies of acoustic instruments and then recorded again. Recordings have been cut up and spliced together in new constellations.

Although the blinking lights and the tangle of patch cords of a modular project an image of complicated machinery leading its own autonomous electric life, another aesthetic is possible even in the realm of modular music: an aesthetic of handicraft that allows for the imperfections of improvisation. The drawings included with the digital album and the linoleum-print cover of the cassette hint in that direction.

Linoleum print © Holopainen. Makes for an interesting stereo image as well.

There has always been a focus on technique and gear in the electronic music community. As modulars become more common, their ability to provoke curiosity may dwindle if the music made with them fails to convince listeners.

Wednesday, August 12, 2015

Golomb Rulers and Ugly Music

A Golomb ruler has marks on it for measuring distances, but unlike an ordinary ruler it has a small number of irregularly spaced marks that still allow a large number of distances to be measured. The defining property is that no two pairs of marks are the same distance apart. The marks are at integer multiples of some arbitrary unit. A regular ruler of length six units has marks at 0, 1, 2, 3, 4, 5 and 6, and can measure every distance from 1 to 6 units. A Golomb ruler of length six could have marks at 0, 1, 4 and 6.

Each distance from 1 to 6 can be found between exactly one pair of marks on this ruler: 1 = 1 - 0, 2 = 6 - 4, 3 = 4 - 1, 4 = 4 - 0, 5 = 6 - 1 and 6 = 6 - 0. A Golomb ruler with this nice property, that every distance from 1 up to its length can be measured with it, is called a perfect Golomb ruler. Unfortunately, a theorem states that there are no perfect Golomb rulers with more than four marks.
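These properties are easy to check by brute force. A minimal sketch with hypothetical helper names: it confirms that 0, 1, 4, 6 is a perfect Golomb ruler and, for five marks, searches all rulers of the only possible length, 5·4/2 = 10 (five marks give ten distances, which would have to be exactly 1 to 10), finding none, as the theorem predicts.

```python
from itertools import combinations


def distances(marks):
    """All distances between pairs of marks."""
    return [b - a for a, b in combinations(sorted(marks), 2)]


def is_golomb(marks):
    """No distance occurs twice."""
    d = distances(marks)
    return len(d) == len(set(d))


def is_perfect(marks):
    """Golomb, and every distance from 1 to the ruler's length is measurable."""
    length = max(marks) - min(marks)
    return is_golomb(marks) and set(distances(marks)) == set(range(1, length + 1))


print(is_golomb([0, 1, 4, 6]), is_perfect([0, 1, 4, 6]))  # True True
print(is_golomb([0, 1, 2, 3]))  # False: distance 1 occurs three times

# A perfect ruler with m marks measures m*(m-1)/2 distinct distances,
# so its length must be exactly m*(m-1)/2. Search all such rulers for m = 5.
m = 5
length = m * (m - 1) // 2
found = [(0, *inner, length) for inner in combinations(range(1, length), m - 2)
         if is_perfect((0, *inner, length))]
print(found)  # []: no perfect Golomb ruler with five marks
```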

Sidon sets are subsets of the natural numbers {1, 2, ..., n} such that the sums of all pairs of numbers in the set are different. It turns out that Sidon sets are equivalent to Golomb rulers: a + b = c + d holds exactly when a - c = d - b, so a repeated sum is the same thing as a repeated distance. The proof must be one of the lowest-hanging fruits in all of mathematics.
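The equivalence can also be confirmed exhaustively for small sets. This sketch uses the usual convention that sums a + a count as well; the helper names are hypothetical.

```python
from itertools import combinations, combinations_with_replacement


def is_sidon(numbers):
    """All pairwise sums a + b (a <= b, including a + a) are distinct."""
    sums = [a + b for a, b in combinations_with_replacement(sorted(numbers), 2)]
    return len(sums) == len(set(sums))


def is_golomb(marks):
    """All pairwise distances b - a (a < b) are distinct."""
    diffs = [b - a for a, b in combinations(sorted(marks), 2)]
    return len(diffs) == len(set(diffs))


# Exhaustive check: both properties agree on every 4-element subset of {1, ..., 15}
assert all(is_sidon(s) == is_golomb(s) for s in combinations(range(1, 16), 4))
print(is_sidon({1, 2, 5, 7}))  # True: the ruler 0, 1, 4, 6 shifted up by one
```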

An interesting property of Golomb rulers is that, in a sense, they are maximally irregular. Toussaint used them to test a theory of rhythmic complexity precisely because of their irregularity, which is something that sets them apart from more commonly encountered musical rhythms.

There is a two-dimensional counterpart to Golomb rulers, the Costas array, which was used to compose a piano piece that allegedly contains no repetition and is therefore the ugliest kind of music its creator could think of.
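The two-dimensional counterpart is known as a Costas array: a permutation f such that all displacement vectors (j - i, f(j) - f(i)) between pairs of dots are distinct. Read as music, i can be taken as the time step and f(i) as the pitch. A minimal sketch uses the Welch construction, f(i) = g^i mod p for a prime p and a primitive root g; p = 11 and g = 2 are chosen only for illustration, and this is not claimed to be how the piano piece was generated.

```python
def welch_costas(p, g):
    """Welch construction: f(i) = g**i mod p for i = 0..p-2.
    When g is a primitive root mod p, this is a permutation of 1..p-1."""
    return [pow(g, i, p) for i in range(p - 1)]


def is_costas(perm):
    """All displacement vectors between pairs of dots are distinct."""
    n = len(perm)
    vectors = [(j - i, perm[j] - perm[i]) for i in range(n) for j in range(i + 1, n)]
    return len(vectors) == len(set(vectors))


arr = welch_costas(11, 2)
print(arr)                                   # [1, 2, 4, 8, 5, 10, 9, 7, 3, 6]
print(is_costas(arr))                        # True
print(is_costas(list(range(10))))            # False: a rising scale repeats (1, 1)
```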

Contrary to what Scott Rickard says in this video, there are musical patterns in this piece. Evidently octave equivalence was not taken into account, so there is a striking passage of ascending octaves and hence repetition of pitch classes.

At first hearing, the "ugly" piece may sound like a typical 1950s serialist piece, but it has some distinctive features, such as its sequence of single notes and its sempre forte dynamics. Successful serialist pieces tend to be much more varied in texture.

The (claimed) absence of patterns in the piece is more extreme than in a random sequence of notes. If notes were drawn randomly from a uniform distribution, there would be some probability of immediately repeated notes as well as of repeated sequences of intervals. When someone tries to improvise a sequence of random numbers, say using just the numbers 0 and 1, they typically exaggerate the number of changes and produce too little repetition. True randomness is more orderly than our intuitive conception of it. In that sense, the "ugly" piece agrees with our idea of randomness better than an actually random sequence of notes would.
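A quick calculation supports the point. Assuming, for illustration, a melody of 88 notes drawn uniformly and independently from the 88 piano keys, the chance that no note is immediately repeated is (87/88)^87, about 0.37, so roughly 63% of such random melodies would contain at least one immediate repetition. A simulated fair 0/1 sequence likewise repeats its previous value about half the time, far more often than improvised "random" sequences tend to.

```python
import random


def immediate_repeats(seq):
    """Count positions where a value repeats the one just before it."""
    return sum(a == b for a, b in zip(seq, seq[1:]))


keys, length = 88, 88
p_no_repeat = (1 - 1 / keys) ** (length - 1)
print(f"P(no immediate repetition) = {p_no_repeat:.2f}")  # 0.37

rng = random.Random(2015)  # fixed seed so the run is reproducible
coin = [rng.randint(0, 1) for _ in range(10_000)]
repeat_rate = immediate_repeats(coin) / (len(coin) - 1)
print(f"share of immediate repeats in a random 0/1 sequence = {repeat_rate:.3f}")
```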

When using Golomb rulers for rhythm generation, it may be practical to repeat the pattern instead of extending a Golomb ruler to the length of the entire piece. When the pattern repeats, it occurs cyclically, so the definition of the ruler should change accordingly. We then have a circular Golomb ruler, where the marks are placed on a circle and distances are measured along its circumference. A circular ruler on which every distance occurs exactly once is better known as a cyclic difference set.
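A minimal sketch of a circular ruler check, assuming a cycle of n = 7 steps with marks at 0, 1 and 3 (illustrative values; the helper names are hypothetical). Distances are measured clockwise, so each ordered pair of marks contributes one distance. For this set every distance from 1 to 6 occurs exactly once, and the marks can be read directly as a repeating rhythm.

```python
def cyclic_distances(marks, n):
    """Clockwise distance from every mark to every other mark on a circle of n steps."""
    return sorted((b - a) % n for a in marks for b in marks if a != b)


def is_cyclic_difference_set(marks, n):
    """Every distance 1..n-1 occurs exactly once."""
    return cyclic_distances(marks, n) == list(range(1, n))


marks, n = [0, 1, 3], 7
pattern = "".join("x" if step in marks else "." for step in range(n))
print(cyclic_distances(marks, n))          # [1, 2, 3, 4, 5, 6]
print(is_cyclic_difference_set(marks, n))  # True
print(pattern * 2)                         # xx.x...xx.x... (two repetitions)
```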

Although the concept of a Golomb ruler is easy for anyone to grasp, a little generalization and some further digging lead to the frontiers of mathematical knowledge, with unanswered questions still to be solved.

And, of course, the Golomb rulers make excellent raw material for quirky music.

Monday, March 30, 2015

Formulating a Feature Extractor Feedback System as an Ordinary Differential Equation

The basic idea of a Feature Extractor Feedback System (FEFS) is to have an audio signal generator whose output is analysed by some feature extractor; this time-varying feature is then mapped to control parameters of the signal generator in a feedback loop.

What would be the simplest possible FEFS that is still capable of a wide range of sounds? Any FEFS must have three components: a generator, a feature extractor, and a mapping from signal descriptors to synthesis parameters. One way to assess the simplicity of a model is to formulate it as a dynamical system and count its dimension, i.e. the number of state variables.

Although FEFS were originally explored as discrete-time systems, some variants can be designed using ordinary differential equations. The generators can simply be some type of oscillator, but it may be less straightforward to implement the feature extractor in terms of ordinary differential equations. However, the feature extractor (also called a signal descriptor) does not have to be very complicated.

One of the simplest possible signal descriptors is an envelope follower, which tracks the sound's amplitude as it changes over time. An envelope follower is easily constructed with differential equations: the idea is simply to apply a lowpass filter (as described in a previous post) to the squared input signal.
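A minimal sketch of such an envelope follower, assuming the lowpass is the first-order equation τ dA/dt = x² - A (the filter from the earlier post may differ) and integrating it with the forward Euler method. For a sinusoid of amplitude a, the mean of x² is a²/2, so A settles near a²/2 and sqrt(2A) recovers the amplitude. The sample rate, time constant and test signal are illustrative.

```python
import math


def envelope_follower(x, dt, tau):
    """Forward-Euler integration of tau * dA/dt = x(t)**2 - A,
    i.e. a first-order lowpass filter applied to the squared input."""
    env, out = 0.0, []
    for sample in x:
        env += dt * (sample * sample - env) / tau
        out.append(env)
    return out


fs, tau = 8000, 0.05  # sample rate (Hz) and time constant (s), illustrative values
dt = 1.0 / fs
half = fs // 2
# 0.5 s of a 200 Hz sine at amplitude 1.0, followed by 0.5 s at amplitude 0.5
x = [(1.0 if n < half else 0.5) * math.sin(2 * math.pi * 200 * n * dt) for n in range(fs)]
env = envelope_follower(x, dt, tau)
print(env[half - 1], env[-1])                               # close to 0.5 and 0.125
print(math.sqrt(2 * env[half - 1]), math.sqrt(2 * env[-1]))  # close to 1.0 and 0.5
```

A longer τ gives a smoother but slower envelope, which is the kind of slow control signal the feedback loop described next relies on.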

For the signal generator, let us consider a sinusoidal oscillator with variable amplitude and frequency. Although a single oscillator could be used for a FEFS, here we will consider a system of N circularly connected oscillators.

The amplitude follower introduces slow changes to the oscillators' control parameters. Since the amplitude follower's output changes smoothly, a smooth mapping would make the synthesis parameters follow the same slow, smooth rhythm. In this system, we will instead use a discontinuous mapping from the measured amplitudes of the oscillators to their amplitudes and frequencies. To this end, the mapping will be based on the relative measured amplitudes of pairs of adjacent oscillators (remember, the oscillators are positioned on a circle).

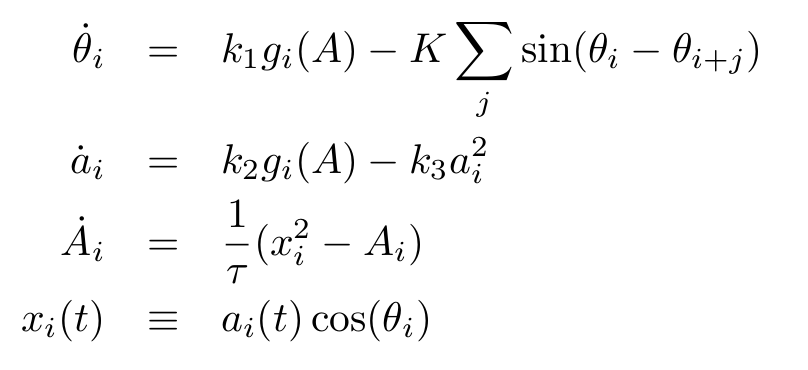

Let g(A) be the mapping function. The full system is

with control parameters k1, k2, k1, K and τ. The variables θ are the oscillators' phases, a are the amplitude control parameters, A is the output of the envelope follower, and x(t) is the output signal. Since x(t) is an N-dimensional vector, any mixture of the N signals can be used as output.

Let the mapping function be defined as

where U is Heaviside's step function and bj is a set of coefficients. Whenever the amplitude of an oscillator grows past the amplitude of one of its neighboring oscillators, the value of g changes, but as long as the relative amplitudes keep the same order, g remains constant. Thus, with a sufficiently slow envelope follower, g should remain constant for relatively long periods before switching to a new state. In the first equation, which governs the oscillators' phases, the g functions determine the frequencies together with a coupling between oscillators. This coupling term is the same as the one used in the Kuramoto model, but here it is usually restricted to two other oscillators. The amplitude a grows at a rate determined by g but is kept in check by the quadratic damping term.

Although this model has many parameters to tweak, some general observations can be made. The system is designed to facilitate a kind of instability, where the discontinuous function g may tip the system over into a new state even after it appears to have settled into a steady state. Note that the function g can take only a finite number of values: since U(x) is either 0 or 1, the number of distinct states is at most 2^N for N oscillators. (The system's dimension is 3N; the x variable in the last equation is really just a notational convenience.)

There may be periods of rapid alternation between two states of g. There may also be periodic patterns that cycle through more than two states. Over longer time spans the system is likely to go through a number of different patterns, including dwelling a long time in one state.

Let S be the total number of states visited by the system, given its parameter values and specific initial conditions. Then S/2^N is the relative number of visited states. One might conjecture that the relative number of visited states decreases as the system's dimension increases. Or does it just take much longer for the system to explore all of the available states as N grows?
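The mapping's equation is not reproduced in this text, but its discrete state can be sketched from its description. For every adjacent pair on the circle, record whether the step function of the amplitude difference is 0 or 1. This sketch assumes one comparison per pair, which gives the bound of 2^N patterns, and takes U(0) = 0. Both are assumptions, as are the function names. Counting the distinct patterns along a trajectory of measured amplitude vectors then gives S and S/2^N.

```python
def heaviside(x):
    """Step function U(x); the value at exactly 0 is taken to be 0 (an assumption)."""
    return 1 if x > 0 else 0


def comparison_pattern(A):
    """Tuple of U(A[i+1] - A[i]) for each adjacent pair on the circle of N oscillators."""
    n = len(A)
    return tuple(heaviside(A[(i + 1) % n] - A[i]) for i in range(n))


def visited_states(trajectory):
    """Return (S, S / 2**N) for a sequence of measured amplitude vectors."""
    patterns = {comparison_pattern(A) for A in trajectory}
    n = len(trajectory[0])
    return len(patterns), len(patterns) / 2 ** n


# Three snapshots of measured amplitudes for N = 3 (illustrative numbers).
print(visited_states([[0.1, 0.2, 0.3], [0.3, 0.2, 0.1], [0.1, 0.2, 0.3]]))  # (2, 0.25)
```

Under these assumptions the all-ones pattern can never occur, because the amplitudes cannot increase all the way around the circle. At most 2^N - 1 patterns are therefore reachable.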

The coupling term may induce synchronisation between the oscillators, but on the other hand it may also make the system's behaviour more complex. Without coupling, each oscillator would only be able to run at a discrete set of frequencies as determined by the mapping function. But with a non-zero coupling, the instantaneous frequencies will be pushed up or down depending on the phases of the linked oscillators. The coupling term is an example of the seemingly trivial fact that adding structural complexity to the model increases its behavioural complexity.

There are many papers on coupled systems of oscillators such as the Kuramoto model, but typically the oscillators interact through their phase variables. In the above model, the interaction is mediated through a function of the waveform, as well as directly between the phases through the coupling term. Therefore the choice of waveform should influence the dynamics, which indeed has been found to be the case.

With all the free choices of parameters, of the b coefficients, the waveform and the coupling topology, this model allows for a large set of concrete instantiations. It is not the simplest conceivable example of a FEFS, but still its full description fits in a few equations and coefficients, while it is capable of seemingly unpredictable behaviour over very long time spans.

Friday, February 20, 2015

Capital in the 21st century: book review

Thomas Piketty:

Le capital au XXIe siècle. Seuil 2013

Economic inequality concerns everyone; we all have an opinion about what is fair. On the other hand, equity has not been a primary concern for most economists in recent times, at least not until the publication of Piketty's work. This review is based on the original French edition, although the book has already been translated into several languages.

Piketty's approach is historical, beginning with an overview of capital and revenues in the rich countries from the 18th century to the present. While it turns out to be true that inequalities have increased since the mid 20th century, it is perhaps surprising to learn that there were once even greater inequalities, peaking in the decades before the first world war. Social mobility was low, and the best way to ensure a comfortable living standard for someone who was not born into a wealthy family was to marry rich rather than to try to get a well-paid job. The gaps between the rich and the poor shrank in the 20th century, at least until the 1970s, and Piketty argues that this happened mainly as a consequence of the two world wars. Governments appropriated private capital and introduced heavy marginal taxes that gradually led to a redistribution of wealth and the emergence of a middle class.

Le capital is written for a broad audience with no prior knowledge of economic theory. Central concepts are clearly explained in an accessible way. Mathematical formulas beyond plain arithmetic are avoided, even when their use could have simplified the exposition. However, Piketty stresses that economics is not the exact science it is often believed to be, and he is rightly sceptical about the exaggerated use of fancy mathematical theories with little grounding in empirical facts.

Although Piketty tries to avoid the pitfalls of speculative theories, he still makes some unrealistic thought experiments, not least concerning population growth and its bearing on economic growth. Population growth has two important effects: it increases economic growth and it contributes to a gradual diffusion of wealth. When each person on average has more than one child who inherits equal parts of their parents' patrimony, the wealth gradually becomes more equally distributed. Now, it does not require much imagination to realise that population growth cannot go on for much longer on this finite planet, and large-scale space migration is not a likely option any time soon.

Piketty is at his best in polemics against other economists or explaining methodological issues such as how to compare purchasing power across the decades. Some products become cheaper to produce, so being able to buy more of them does not necessarily imply that the purchasing power has risen. Entirely new kinds of products such as computers or mobile phones enter the market, which also makes direct comparisons complicated.

Human capital is often supposed to be one of the most valuable resources there are, but Piketty seems uneasy about the whole concept. Indeed, when discussing the American economy in the 19th century, a conspicuous form of human capital enters the balance sheets in the form of slaves with their market value. The wealth in the Southern states of America before abolition looks very different depending on whether you think it is possible to own other human beings or not.

Top incomes in the United States have seen a spectacular rise in the last decades. An argument often advanced in favour of exceptionally high salaries is that productive people should be rewarded in proportion to their merits. The concept of marginal productivity describes the increase in productivity as a job is done by a person with better qualifications. However, there is no reliable way to measure the marginal productivity, at least not among top leaders. In practice, as Piketty argues, there is a tendency to "pay for luck" and not for merit as such; if the company happens to experience success, then its CEO can be compensated. The modern society's meritocracy, Piketty writes, is more unfair to its losers than the Victorian society where nobody pretended that the economic differences were deserved. At that time, a wealthy minority earned 30-50 times as much as the average income from revenues of their capital alone.

This vision of inequality at least has the merit of not describing itself as meritocratic. In a way, a minority is chosen to live on behalf of all the others, but nobody tries to pretend that this minority is more deserving or more virtuous than the rest of the population. [...] Modern meritocratic society [...] is much harsher on the losers (pp. 661-2).

Marginal tax rates and progressive taxation appear to be decisive for top incomes. Lowering taxes actually makes it easier for top leaders to argue in favour of increased salaries, whereas high marginal taxes mean that most of the increase will be lost in taxation anyway. On the other hand, there is the problem of tax havens and fiscal competition between countries, which is not easily solved. In addition, lobbyists spend a good deal of money trying to convince politicians to keep taxes low, as a new study from Oxfam has shown.

The ratio of capital to revenue in the rich countries, and how it varies over time, is studied in detail. The amount of capital is usually equivalent to a few years of revenue. However, the curve of capital over time, measured in years of revenue, is found to be U-shaped, with a dip during the two world wars. This relative balance of capital and revenue actually sheds light on the structure of wealth distribution. Most capital is private and consists of real estate and stocks. There are mechanisms that make capital grow, seemingly without effort: "Money sometimes tends to reproduce itself."

Here the interesting principle of capital-dependent growth comes into play. As it happens, the more capital you have to begin with, the faster you can make it grow. This is the case even for bank accounts, where the interest rate on savings is usually higher above a certain threshold. However, such interest rates usually barely compensate for the effects of inflation, and for small amounts of savings they are usually lower still. On the other hand, capital in the form of real estate or stocks may grow faster than wages. One reason for the level-dependent growth of wealth is that a larger initial amount of capital makes it easier to take financial risks and to be patient and await the right moment. According to Piketty, however, the most important explanation is that the richest investors have more options to engage expert advisors when making their placements, so they can seek out less obvious investments with high capital revenues.
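A toy calculation in Python can make the principle of level-dependent growth concrete. The return tiers below are invented for illustration and are not Piketty's figures, and the function names are hypothetical. The only point is this: when the rate of return rises with the level of wealth, the gap between a small and a large fortune widens year after year without either owner doing anything.

```python
def tiered_return(wealth):
    """Hypothetical annual return that rises with the size of the fortune."""
    if wealth >= 1_000_000:
        return 0.06
    if wealth >= 10_000:
        return 0.03
    return 0.01


def grow(wealth, years, rate_of=tiered_return):
    """Compound wealth once a year at a rate that depends on its current level."""
    for _ in range(years):
        wealth *= 1.0 + rate_of(wealth)
    return wealth


small, large = 20_000.0, 2_000_000.0
print(large / small)                      # 100.0 at the start
print(grow(large, 30) / grow(small, 30))  # larger than 100: the gap has widened
```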

Inheritance, rather than well paid work, was the way to wealth in the 19th century. To what extent are we about to return to that economic structure today? Indeed, there are worrisome indications that today's society risks a return of the rentiers, or persons of private means. Piketty's solution to the wealth distribution problem is, roughly, to increase the transparency of banks and to impose a progressive tax on capital in addition to revenue taxation.

Shortcomings

Capital has become a bestseller and has already had a significant impact on the public debate (e.g. a recent report from Citi GPS on the future of innovation and employment). Piketty leaves the old quarrels between communism and free-market capitalism behind and proposes solutions based on evidence rather than wishful thinking or elegant theories with little grounding in reality. However, the last word in this debate has not been said. Free-market proponents will have their familiar criticism, but it seems more appropriate to point out the shortcomings and the limited perspective of Piketty's account from an entirely different point of view. His account is still a flavour of classical economic theory. It does not seriously consider the role of energy consumption in the economy, and it is not sufficiently concerned with the finite mineral resources, including fossil fuels, that are being depleted. Nor does it address the problems of pollution, global warming and ecological collapse that will soon have tremendous impacts on the economy. Piketty is probably fully aware of these problems. Yet apart from a brief mention of the Stern Review, he neglects to make them part of the narrative, which therefore becomes one-sided. Perhaps this is an unfair criticism, since the aim of the book is to demonstrate the mechanisms driving increasing inequality in a historical perspective, but important facets are nonetheless missing.

Although no background knowledge of economics is assumed and many concepts are lucidly explained for the general audience, the book is not trying to be an introductory textbook on economic theory. Fortunately, there are many resources for reading up on the basic mechanisms of the economy that include the environment and energy resources as part of the equation. Gail Tverberg's introduction is a good place to start. Many interesting articles appear at resilience.org. Ugo Bardi's blog Resource Crisis is worth a visit for further reading.
The interwoven problems of debt, peak oil and climate change have been discussed at length by Richard Heinberg, and also in a previous post here. The new keyword that will shape our understanding of the economic system is degrowth.

Wednesday, January 7, 2015

Decimals of π in 10TET

The digits of π have been translated into music a number of times. Sometimes the digits are translated to the pitches of a diatonic scale, perhaps accompanied by chords. The random appearance of the sequence of digits is reflected in the aimless meandering of such melodies. But wouldn't it be more appropriate, in some sense, to represent π in base 12 and map the digits to the chromatic scale? After all, there is nothing in π that indicates that it should be sung or played in a major or minor tonality. Of course, mapping integers to the twelve chromatic pitches is just about as arbitrary as any other mapping; it is a decision one has to make. However, it is easier to use the usual base 10 representation and to map it to a 10-TET tuning with 10 equal pitch steps in one octave.
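The mapping itself takes only a few lines. The sketch below uses Gibbons' unbounded spigot algorithm to generate the decimal digits of π one at a time. It then maps a digit d to the frequency base × 2^(d/10), which is d steps of 10-TET above a base pitch. The 220 Hz base and the function names are illustrative assumptions, not the settings used in the etude.

```python
from itertools import islice


def pi_digits():
    """Gibbons' unbounded spigot: yields the decimal digits of pi (3, 1, 4, 1, 5, ...)."""
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            yield n  # the next digit is certain
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # consume one more term of the series before deciding
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)


def tet10_frequency(digit, base_freq=220.0):
    """Map a digit 0-9 to one of ten equal steps within the octave above base_freq."""
    return base_freq * 2 ** (digit / 10)


melody = [tet10_frequency(d) for d in islice(pi_digits(), 16)]
```

Digit 0 lands on the base pitch and digit 9 lands one step below the octave, so the melody never leaves a single octave.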

Here is an etude that does precisely that, with two voices in tempo relation 1 : π. The sounds are synthesized with algorithms that also incorporate the number π. In the fast voice, the sounds are made with FM synthesis where two modulators with ratio 1 : π modulate a carrier. The slow voice is a waveshaped mixture of three partials in ratio 1 : π : π².
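A rough sketch of the two timbres follows. It assumes the FM is implemented as phase modulation of a sine carrier and that the waveshaper is a tanh curve; the text does not say which shaping function the etude uses. Frequencies, modulation indices, drive and durations are placeholder values, and the function names are hypothetical. Only the ratios 1 : π and 1 : π : π² come from the text.

```python
import math

PI = math.pi


def fm_two_modulators(fc, fm, index1, index2, dur, sr=44100):
    """Sine carrier phase-modulated by two sines whose frequencies are in ratio 1 : pi."""
    out = []
    for n in range(int(dur * sr)):
        t = n / sr
        modulation = (index1 * math.sin(2 * PI * fm * t)
                      + index2 * math.sin(2 * PI * PI * fm * t))
        out.append(math.sin(2 * PI * fc * t + modulation))
    return out


def waveshaped_partials(f0, drive, dur, sr=44100):
    """Three equal-amplitude partials in ratio 1 : pi : pi**2, passed through tanh."""
    out = []
    for n in range(int(dur * sr)):
        t = n / sr
        mix = sum(math.sin(2 * PI * f0 * ratio * t) for ratio in (1.0, PI, PI ** 2))
        out.append(math.tanh(drive * mix / 3.0))
    return out


# Note durations in ratio 1 : pi, mirroring the tempo relation of the two voices.
fast_voice = fm_two_modulators(fc=440.0, fm=110.0, index1=2.0, index2=1.0, dur=0.25)
slow_voice = waveshaped_partials(f0=110.0, drive=3.0, dur=0.25 * PI)
```

Because π is irrational, neither the modulator pair nor the three partials share a common period. Both timbres are therefore inharmonic.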

Despite the random appearance of the digits of π, it is not even known whether π is a normal number or not. Let us recall the definition: a number that is normal in base b has an equal proportion of each of the digits 0, 1, ..., b-1, and an equal proportion of every possible sequence of two digits, of three digits, and so on. ("Digit" is usually reserved for the base 10 number system, so you may prefer to call them "letters" or "symbols".) A number that is normal in every base is simply called normal.
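Counting digit and block frequencies is easy. However, no finite count can prove or disprove normality; it can only show whether a prefix looks statistically uniform. The helper below (hypothetical names) tallies overlapping blocks of length k. It is tried on Champernowne's constant 0.123456789101112..., which D. G. Champernowne proved in 1933 to be normal in base 10.

```python
from collections import Counter


def block_frequencies(digits, k=1):
    """Relative frequency of every overlapping length-k block in a string of digits."""
    blocks = [digits[i:i + k] for i in range(len(digits) - k + 1)]
    total = len(blocks)
    return {block: count / total for block, count in Counter(blocks).items()}


def champernowne_digits(n):
    """First n decimals of Champernowne's constant 0.123456789101112..."""
    parts, length, i = [], 0, 1
    while length < n:
        parts.append(str(i))
        length += len(parts[-1])
        i += 1
    return "".join(parts)[:n]


freqs = block_frequencies(champernowne_digits(100_000))
# In a finite prefix the shares are only roughly 1/10 each; 0 lags behind,
# since no integer is written with a leading zero.
```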

Some specific normal numbers have been constructed. But even though it is known that almost all numbers are normal, a proof that a given number is normal is often elusive. Rational numbers are not normal in any base, since their expansions all end in a periodic sequence. For example, 22/7 = 3.142857142857..., where the block 142857 repeats forever. However, there are also irrational non-normal numbers, some of which are quite exotic in the way they are constructed.
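The periodicity of rational numbers follows from long division. When dividing by q there are only q possible remainders, so some remainder must eventually recur, and from then on the digits repeat. A short sketch (the function name is hypothetical) detects the repeating block by remembering where each remainder first appeared:

```python
def decimal_expansion(p, q):
    """Return (integer part, non-repeating decimals, repeating decimals) of p/q.

    Assumes p >= 0 and q > 0. Long division stops as soon as a remainder recurs.
    """
    integer, rem = divmod(p, q)
    digits = []
    first_seen = {}  # remainder -> index of the digit it produced
    while rem != 0 and rem not in first_seen:
        first_seen[rem] = len(digits)
        rem *= 10
        digits.append(str(rem // q))
        rem %= q
    if rem == 0:  # terminating expansion (trailing zeros are the "period")
        return integer, "".join(digits), ""
    start = first_seen[rem]
    return integer, "".join(digits[:start]), "".join(digits[start:])


print(decimal_expansion(22, 7))  # (3, '', '142857')
print(decimal_expansion(1, 6))   # (0, '1', '6')
```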

Tuesday, December 16, 2014

Global warming, peak oil, collapse and the art of forecasting

Predicting the future usually means providing an accurate analysis of the present and extrapolating some trends. Therefore, forecasts that try to look at least a few decades ahead need to be well informed about the current situation. However, we have various options; we may make different decisions that will influence the outcome. For climate change, the amount of greenhouse gases emitted will be a major factor in determining the amount of warming, and this is obviously the topic of negotiations.

The Intergovernmental Panel on Climate Change (IPCC)

The IPCC operates with four "representative concentration pathways" (RCPs) of CO2 equivalents in the atmosphere and the effects each will have on the climate. The pathways stand for different strategies of coping with the challenges, from halting greenhouse gas emissions immediately (not likely to happen) to sticking one's head in the tar sands and increasing emission rates as much as possible. Part of the debate now revolves around whether or not the most restrictive pathway can be combined with continued economic growth, but more about that below.

The IPCC's fifth report from November 1 2014 comes with its usual summary for policymakers. However, having a policy is not restricted to the assumed readers of the report; we are all policymakers. The IPCC's report sketches the facts (melting ice, ocean acidification, etc.) and suggests mitigation strategies and adaptation to the inevitably worsening climate in many parts of the world. Perhaps the physics behind global warming is assumed to be known, but possible positive feedback mechanisms are worth mentioning. This is how the summary alludes to them:

It is virtually certain that near-surface permafrost extent at high northern latitudes will be reduced as global mean surface temperature increases, with the area of permafrost near the surface (upper 3.5 m) projected to decrease by 37% (RCP2.6) to 81% (RCP8.5) for the multi-model average (medium confidence).

Melting ice means that the albedo will decrease, so that a darker sea surface will absorb more energy. Methane may also be released, either gradually or in a sudden burp, as permafrost thaws. For the record, methane has a global warming potential many times that of CO2 (the factor depends on the time horizon, which is why different figures may be used).

As usual, the so-called policymakers are reluctant to take concerted action. From the debate it may appear as though the goal of limiting global warming to 2°C would be enough to keep up business as usual. Oil companies and their allied business partners either invest in campaigns casting doubt on climate science, or argue that their production is so much cleaner than in the rest of the world, and that if they didn't drill for oil, someone else would. Yet we know that most of the known hydrocarbon sources will have to remain in the ground to minimize the impact of climate change. Even in one of the most optimistic scenarios, we would have to prepare for extreme weather: droughts, hurricanes, forest fires, flooding, and mass extinction of species. Perhaps an awareness of this unwillingness to take prompt measures to reduce greenhouse gas emissions is what has tipped the IPCC's focus somewhat toward adaptation to shifting climate conditions, rather than focusing narrowly on mitigation strategies.

Global Strategic Trends: a broader perspective

With this gloomy outlook in mind, one should not forget that there is a fast-paced development in science and technology in areas from solar panels to medicine and artificial intelligence. The British Ministry of Defence's think tank Development, Concepts and Doctrine Centre has compiled a report that tries to summarize trends in several areas in a thirty-year perspective up to 2045. Their Global Strategic Trends (GST) report does not make predictions or assert the likelihood of various outcomes. Instead, it illustrates where some of the current trends may lead and provides alternative scenarios. The strength of the GST report is its multifaceted view. Politics, climate, population dynamics, scientific and technological development, security issues, religion and economics are all interrelated at some level, so that significant events in one domain have implications in other domains. Such cross-disciplinary interactions may be difficult to speculate about, but the GST report at least brings all these perspectives together in a single pdf, with some emphasis on military strategy and defence. A weakness of the GST report is that it is perhaps too fragmented, and the most important challenges are almost lost in all the detail.

Peak oil, the debt economy and climate change

A better and more succinct summary of these great challenges might be one of Richard Heinberg's lectures. As Heinberg neatly explains, the exceptional economic growth witnessed over the last 150 years is the result of the oil industry. In the early years, oil was relatively easy to find and to produce. Gradually, the best oil fields have been depleted and only resources of lesser quality remain, such as the recently discovered shale oil across the UK. The production of unconventional oil is much less efficient than that of conventional crude oil. The question is how much energy goes into producing energy, that is, what the Energy Return On Energy Invested (EROEI) is. As this ratio falls toward 1, the energy produced approaches the energy invested in producing it. Although exact figures are hard to estimate, it is clear that the EROEI of unconventional oil is significantly lower than that of conventional oil. It is so low, in fact, that even in strictly economic terms unconventional oil is scarcely worth producing, leaving aside its potentially devastating effects on the environment.

Heinberg discusses three issues which combined seem to have very dramatic consequences for the civilised world as we know it. First, there is the energy issue and peak oil. It appears unlikely that renewable energy sources and nuclear power will be able to replace fossil fuel at the pace that would be needed for continuing economic growth. Second, there is debt as the driver of economic growth. As an illustration, Heinberg mentions the early automobile industry with its efficient, oil-powered assembly line production, so efficient in fact that it led to over-production. The next problem became how to sell cars to customers, because these were more expensive than people could generally afford. Hence came the invention of advertising, of "talking people into wanting things they didn't need", and subsidiary strategies such as planned obsolescence: making products that broke down and had to be replaced, or the constant redesign and changing fashion so that consumers would want to have the latest model. The solution to the cars being too expensive was to offer consumers credit. Money is created every time someone gets a loan from the bank with the trust that it will be paid back in the future, which again necessitates economic growth. This system has sometimes been likened to a pyramid scheme, and it is not a very radical idea to believe that it could collapse at some point. The third issue that Heinberg brings up is climate change, which will lead to global disruption as large parts of the world become uninhabitable.
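The arithmetic behind the EROEI argument is short. If obtaining a gross energy output E costs E/EROEI in invested energy, the net energy left for the rest of the economy is the fraction 1 - 1/EROEI of the gross. The sketch below tabulates that fraction. The EROEI values are arbitrary sample points, not estimates for any particular oil source, and the function name is hypothetical.

```python
def net_energy_fraction(eroei):
    """Share of gross energy output left after paying back the energy invested."""
    if eroei <= 0:
        raise ValueError("EROEI must be positive")
    return 1.0 - 1.0 / eroei


for eroei in (50, 20, 10, 5, 3, 2, 1):  # arbitrary sample values
    print(f"EROEI {eroei:>2}: {net_energy_fraction(eroei):.0%} of output is net energy")
```

Going from an EROEI of 50 to 10 costs only 8 percentage points of net energy, whereas going from 5 to 2 costs 30. The loss accelerates as the ratio falls toward 1, where nothing is left over.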

Two of Heinberg's recent essays deal with the energy situation and the coming transition to a society with a very different pattern of energy consumption. He criticizes the excessive optimism about renewable energy on the one hand and the "collapsitarians" on the other, some of whom even think we are bound for extinction. The conclusion is that energy use and consumption in general must be reduced, either voluntarily or out of necessity when there are no longer any alternatives.

An even more vivid account of more or less the same story is given by Michael C. Ruppert in the documentary Collapse. And after the realisation that this is probably where we are heading, it may be best to take the advice of Dmitry Orlov who has some experience of living in a collapsed Soviet Union: just stay calm, be prepared to fix anything that breaks down yourself and don't expect any more holiday trips to the other side of the planet!

Monday, August 25, 2014

Reptilian Revolution

Almost unquantized music.

Q: Is this a concept album?

A: Yes. Its subject matter is not only derived from those entertaining kooks who see shapeshifting reptilians on u-tube videos with their very own eyes, hence they must exist; there are also references to more serious topics such as the unhealthy state of affairs alluded to in this previous post.

Monday, May 12, 2014

Programming for algorithmic composition

Computer programming is an essential prerequisite for musical composition. Imagine if that were the case. Of course it is not, any more than composition is necessary for programming. However, in algorithmic composition you do not get very far without recourse to computer programming, and for composers to learn programming, algorithmic composition is the best way to get started.

Writing a program that outputs a specific composition is very different from programming for the general needs of some other user, which is what professional programmers do. Since no assumptions about the interaction with some unknown user have to be made, it should be much easier. Programming in algorithmic composition is nothing other than a particular way of composing, a method that inserts a layer of formalisation between the composer and the music. Algorithmic composition is a wonderful opportunity to investigate ideas, to learn about models and simulations, to map data to sound.

Generative music, which is not necessarily synonymous with algorithmic composition, is often concerned with making long pieces or a set of pieces that may be created on demand. The forthcoming album Signals & Systems is filled to the brim with algorithmic compositions, and the source code for a program that outputs variants on one of the pieces is now available.
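One simple way to get "a set of pieces that may be created on demand" is to fix the rules of a piece and let a random seed select the variant. The sketch below is hypothetical (the pitch set, durations and function name are invented for illustration and are not the Signals & Systems code): the same seed always reproduces the same variant, and a new seed gives a new one.

```python
import random

# Hypothetical material for illustration: a fixed pitch set and a duration palette (in beats).
PITCHES = [60, 62, 65, 67, 70]
DURATIONS = [0.25, 0.5, 0.5, 1.0]   # 0.5 is listed twice to make it more likely


def variant(seed, length=32):
    """Return one variant of the 'piece' as (MIDI pitch, duration) pairs; same seed -> same variant."""
    rng = random.Random(seed)        # private generator, so variants do not depend on global state
    notes = []
    for _ in range(length):
        pitch = rng.choice(PITCHES) + 12 * rng.choice([-1, 0, 0, 1])  # optional octave displacement
        notes.append((pitch, rng.choice(DURATIONS)))
    return notes


if __name__ == "__main__":
    for seed in range(3):
        print(seed, variant(seed, length=8))
```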

Wednesday, February 26, 2014

Manifesto for self-generating patches

Ideas for the implementation of autonomous instruments in analog modular synths (v. 0.2)

The following guidelines are not meant as aesthetic value judgements or prescriptions as to what people should do with their modulars — as always, do what you want! The purpose is to propose some principles for the exploration of a limited class of patches and a particular mode of using the modular as an instrument.

Self-generating patches are those which, when left running without manual intervention, produce complex and varied musical patterns. Usually, the results will be more or less unpredictable. In this class of patches, there are no limitations as to which modules to use and how to connect them, except that one should not change the patch or touch any knobs after the patch has been set up to run. An initial phase of testing and tweaking is of course allowed, but when preparing a recording as documentation of the self-generating patch, it should just run uninterrupted on its own.

A stricter version of the same concept is to try to make a deterministic autonomous system in which there is no source of modulation (such as LFOs or sequencers) that is not itself modulated by other sources. In consequence, the patch has to be a feedback system.
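A software analogy may help to see why such a patch must be a feedback system. In the hypothetical sketch below, two sine oscillators frequency-modulate each other and nothing else drives them: there is no noise source and no free-running LFO, so the output is fully deterministic. The base frequencies f1, f2 play the role of knob settings, and all parameter values are arbitrary assumptions, not a recommended patch.

```python
import math


def feedback_patch(n_samples, sr=8000, f1=110.0, f2=171.0, depth1=80.0, depth2=60.0):
    """Two sine oscillators that frequency-modulate each other; returns their mix."""
    phase1, phase2 = 0.0, 0.25   # different start phases; no random source is involved
    y1 = math.sin(2 * math.pi * phase1)
    y2 = math.sin(2 * math.pi * phase2)
    out = []
    for _ in range(n_samples):
        # each oscillator's frequency depends on the other's current output: the feedback loop
        freq1 = f1 + depth1 * y2
        freq2 = f2 + depth2 * y1
        phase1 = (phase1 + freq1 / sr) % 1.0
        phase2 = (phase2 + freq2 / sr) % 1.0
        y1 = math.sin(2 * math.pi * phase1)
        y2 = math.sin(2 * math.pi * phase2)
        out.append(0.5 * (y1 + y2))
    return out
```

Running it twice gives identical output, which is the "deterministic autonomous system" in miniature; any variety in the sound has to come from the interaction in the loop.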

The patch may be regarded as a network with modules as the nodes and patch cords as the links. Specifically, it is a directed graph, since each patch cord runs from an output to an input, and most modules have both inputs and outputs. (The requirement that there be no source of modulation that is not itself modulated by other modules implies that, e.g., noise modules or LFOs without any input are not allowed.) Thus, in the graph corresponding to the patch, each node must have at least one incoming link and at least one outgoing link. The entire patch must be interconnected in the sense that one can follow the patch cords from any module through intervening modules to any other module that belongs to the patch.
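These conditions can be checked mechanically once the patch is written down as a list of cords. The function below is a hypothetical sketch: it reads "follow the patch cords" as following them in the signal direction, which makes the connectivity condition strong connectivity of the directed graph (if direction may be ignored, a weaker check would suffice).

```python
from collections import deque


def _reachable(start, adjacency):
    """All nodes reachable from start by following the given adjacency sets."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def is_valid_self_generating_patch(cords):
    """cords: iterable of (source_module, destination_module) pairs."""
    cords = list(cords)
    nodes = {module for cord in cords for module in cord}
    if not nodes:
        return False
    forward, backward = {}, {}
    for src, dst in cords:
        forward.setdefault(src, set()).add(dst)
        backward.setdefault(dst, set()).add(src)
    # every module needs at least one incoming and one outgoing cord
    if any(n not in forward or n not in backward for n in nodes):
        return False
    start = next(iter(nodes))
    # strongly connected: every module is reachable from start, and start from every module
    return _reachable(start, forward) == nodes and _reachable(start, backward) == nodes
```

For example, two oscillators patched into each other pass, while a patch with an LFO that receives no input fails the first test.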

Criterion of elegance:

The smaller the number of modules and patch cords used, the more elegant the patch is. (Caveat: modules are not straightforwardly comparable. There are small and simple modules with restricted possibilities, and modules with lots of features that may correspond to using several simpler modules.)

Aesthetic judgement:

Why not organize competitions where the audience may vote for their favourite patches, or perhaps let a panel of experts decide?

Standards of documentation:

Make a high-quality audio recording with no post-processing other than possibly volume adjustment. Video recordings and/or photos of the patch are welcome, but a detailed diagram explaining the patch and the settings of all knobs and switches involved should be submitted. The diagram should provide all the information necessary to reconstruct the patch.

Criterion of robustness:

Try to reconstruct the patch with some modules replaced by equivalent ones. Swap one oscillator for another, use a different filter or VCA, and try to get a similar sound. Also try small adjustments of the knobs and see whether they change the sound radically. The more robust a patch is, the easier it should be for other modular enthusiasts to recreate a similar patch on their system.

Criteria of objective complexity:

The patch is supposed to generate complex, evolving sounds, not just a static drone or a steady noise. Define your own signal descriptor of musical complexity and apply it to the recording, or use one of the existing complexity measures.
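As one example of an existing measure, here is a sketch of Lempel-Ziv (1976) complexity, counted with the Kaspar-Schuster algorithm. The choice to binarize the recording around its median and to normalize by n / log2(n) is an assumption of this sketch, not part of any standard for these patches; a steady drone gives a low value, noise a value near 1.

```python
import math
import random
import statistics


def lz76_complexity(bits):
    """Number of phrases in the Lempel-Ziv (1976) parsing of a binary string (Kaspar-Schuster)."""
    n = len(bits)
    if n < 2:
        return n
    c, l, i, k, k_max = 1, 1, 0, 1, 1
    while True:
        if bits[i + k - 1] == bits[l + k - 1]:
            k += 1
            if l + k > n:          # reached the end while copying: count the last phrase
                c += 1
                break
        else:
            k_max = max(k_max, k)
            i += 1
            if i == l:             # no earlier copy found: a new phrase ends here
                c += 1
                l += k_max
                if l + 1 > n:
                    break
                i, k, k_max = 0, 1, 1
            else:
                k = 1
    return c


def signal_complexity(samples):
    """Binarize a signal around its median and return normalized LZ76 complexity."""
    if len(samples) < 2:
        return 0.0
    med = statistics.median(samples)
    bits = "".join("1" if s > med else "0" for s in samples)
    n = len(bits)
    return lz76_complexity(bits) * math.log2(n) / n


if __name__ == "__main__":
    rng = random.Random(0)
    noise = [rng.uniform(-1, 1) for _ in range(2000)]
    tone = [math.sin(2 * math.pi * (k + 0.5) / 40) for k in range(2000)]
    print(signal_complexity(tone), signal_complexity(noise))
```

A patch that evolves in an interesting way should land somewhere between these two extremes, so a single number is at best a rough guide.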

Dissemination:

Spread your results and let us know about your amazing patch!

Tuesday, February 11, 2014

The geometry of drifting apart

Why do point particles drift apart when they are randomly shuffled around? Of course the particles may be restricted by walls that they keep bumping into, or there may be some attractive force that makes them stick together, but let us assume that there are no such restrictions. The points move freely in a plane, only subject to the unpredictable force of a push in a random direction.

Suppose the point $x_n$ (at discrete time $n$) is perturbed by some stochastic vector $\xi$, defined in polar coordinates $(r, \alpha)$ with uniform density functions $f$, such that

$$f_r(\xi_r) = \frac{1}{R}, \quad 0 \le \xi_r \le R,$$

$$f_\alpha(\xi_\alpha) = \frac{1}{2\pi}, \quad 0 \le \xi_\alpha < 2\pi.$$

Thus, $x_{n+1} = x_n + \xi$, and the point may move to any other point within a circle of radius $R$ centered on it.

Now, suppose there is a point p which can move to any point inside a circle P in one step of time, and a point q that can move to any point within a circle Q.

First, suppose the point p remains at its position and the point q moves according to the probability density function. For the distance ||p-q|| to remain unchanged, q has to move to some point on the blue arc that marks points equidistant from p. As is easily seen, the blue arc divides the circle Q into two unequal parts, with the smaller part being closer to p. Therefore, the probability of q moving away from p is greater than the probability of it approaching p. As the distance ||p-q|| increases, the arc through q becomes flatter, thereby dividing Q more equally. Consequently, when p and q are close, they tend to move away from each other at a faster average rate than when they are farther apart, but on average they will always continue to drift apart.
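The asymmetry is easy to check numerically. The sketch below (function names are hypothetical) keeps p fixed at the origin, places q at distance d, draws one step for q with radius uniform on [0, R] and a uniform angle, and counts how often q ends up farther from p.

```python
import math
import random


def step(rng, R):
    """Displacement with radius uniform on [0, R] and angle uniform on [0, 2*pi]."""
    r = rng.uniform(0.0, R)
    a = rng.uniform(0.0, 2.0 * math.pi)
    return r * math.cos(a), r * math.sin(a)


def prob_moving_away(d, R=1.0, trials=200_000, seed=1):
    """Estimate P(||p - q_new|| > ||p - q||) when p stays put and q takes one step."""
    rng = random.Random(seed)
    qx, qy = d, 0.0            # p is at the origin, q at distance d
    away = 0
    for _ in range(trials):
        dx, dy = step(rng, R)
        if math.hypot(qx + dx, qy + dy) > d:
            away += 1
    return away / trials


if __name__ == "__main__":
    print(prob_moving_away(0.5), prob_moving_away(5.0))
```

With R = 1, q moves away exactly when $\cos(\xi_\alpha - \theta) > -\xi_r/(2d)$, where θ is the direction from p to q. For d = 0.5 this gives the exact value 1 − 1/π ≈ 0.682, and the estimate should land close to it; for d = 5 it drops to about 0.516, still above one half, as the argument predicts.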

After q has moved, the same reasoning can be applied to p. Furthermore, the same geometric argument works with several other probability density functions as well.
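A short calculation supports the claim that the points keep drifting apart on average. Assume that $\xi_r$ and $\xi_\alpha$ are independent (the post gives their densities separately but does not state this explicitly), let $d = \lVert q_n - p \rVert$, and let $\theta$ be the direction of $q_n - p$. Then

$$\lVert q_{n+1} - p \rVert^2 = \lVert q_n - p + \xi \rVert^2 = d^2 + 2d\,\xi_r\cos(\xi_\alpha - \theta) + \xi_r^2,$$

and since $\mathbb{E}[\cos(\xi_\alpha - \theta)] = 0$ for a uniform angle and $\mathbb{E}[\xi_r^2] = \int_0^R r^2\,\tfrac{1}{R}\,dr = \tfrac{R^2}{3}$,

$$\mathbb{E}\left[\lVert q_{n+1} - p \rVert^2 \,\middle|\, d\right] = d^2 + \frac{R^2}{3}.$$

The expected squared distance grows by the same amount at every step, whatever the current distance; an independent step of p with the same radius adds another $R^2/3$ by the same argument.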

When a single point is repeatedly pushed around, it traces out a random walk, a discrete analogue of Brownian motion, producing skeins such as this one.

Different probability density functions may produce tangles with other visual characteristics. The stochastic displacement vector itself may be Brownian noise, in which case the path is more likely to travel in more or less the same direction for several steps of time. Then two nearby points will separate even faster.
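Both kinds of path can be generated with a few lines of code. In this sketch (names are hypothetical), the white-noise case adds an independent displacement at every step, while "Brownian noise" displacement is modeled, as an assumption, by letting the displacement itself be the running sum of the white steps, with no leak or damping. Two points starting at the same position and moving independently then end up much farther apart in the second case.

```python
import math
import random


def displacement(rng, R):
    """One white-noise step: radius uniform on [0, R], uniform angle."""
    r = rng.uniform(0.0, R)
    a = rng.uniform(0.0, 2.0 * math.pi)
    return r * math.cos(a), r * math.sin(a)


def walk(n_steps, R=1.0, brownian_steps=False, rng=None):
    """Return the path of one point as a list of (x, y) positions, starting at the origin."""
    if rng is None:
        rng = random.Random()
    x = y = 0.0
    vx = vy = 0.0                  # accumulated displacement, used only when brownian_steps=True
    path = [(x, y)]
    for _ in range(n_steps):
        dx, dy = displacement(rng, R)
        if brownian_steps:
            vx += dx               # the displacement itself performs a random walk
            vy += dy
            dx, dy = vx, vy
        x += dx
        y += dy
        path.append((x, y))
    return path


def mean_separation(n_steps, brownian_steps, trials=200, seed=0):
    """Average final distance between two points that start together and move independently."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        px, py = walk(n_steps, brownian_steps=brownian_steps, rng=rng)[-1]
        qx, qy = walk(n_steps, brownian_steps=brownian_steps, rng=rng)[-1]
        total += math.hypot(px - qx, py - qy)
    return total / trials
```

Plotting the list returned by walk gives the tangles; with brownian_steps=True the path runs in roughly the same direction for many steps before turning.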

Monday, January 13, 2014

Who cares if they listen?

No, it wasn't Milton Babbitt who coined the title of that notorious essay, but it stuck. And, by the way, it was "Who cares if you listen?". Serialism, as Babbitt argues, employs a tonal vocabulary "more efficient" than that of past tonal music. Each note-event is precisely determined by its pitch class, register, dynamic, duration and timbre. In that sense, the music has a higher information density, and the slightest deviation from the prescribed values is a structural change, not just an expressive coloration. Such music inevitably places high demands on the performer as well as on the listener. (Critical remarks could be inserted here, but I'm not going to.) Just as recent advances in mathematics or physics can be understood only by a handful of specialists, there is an advanced music that we should not expect to be immediately accessible to everyone. For such an elitist endeavor to have any chance of survival, Babbitt suggests that research in musical composition should take refuge in universities. Indeed, in 20th-century America that was where serialist composers were to be found.

The recent emergence of artistic research at universities and academies is really not much different from what Babbitt was pleading for. Artistic research is awkwardly situated between theorizing and plain artistic practice as it used to be before everyone had to write long manifestos. But more about that on another occasion, perhaps.

In a more dadaist spirit, there is this new release out now on Bandcamp, also titled Who cares if they listen.

These days there are enough reasons to care if they listen (yes, they do, but not wittingly) and to worry about the consequences, as discussed in a previous post.

Friday, November 1, 2013

The theoretical minimum of physics

The Theoretical Minimum: What You Need to Know to Start Doing Physics

by Leonard Susskind and George Hrabovsky, 2013

This crash course in classical mechanics is targeted at those who “regretted not taking physics at university” or who perhaps did but have forgotten most of it, or anyone who is just curious and wants to learn how to think like a physicist. Since first-year university physics courses usually have rather high dropout rates, there must be some genuine difficulties to overcome. Instead of dwelling on the mind-boggling paradoxes of quantum mechanics and relativity as most popular physics books do, and wrapping it all up in fluffy metaphors and allusions to Eastern philosophy, The Theoretical Minimum offers a glimpse of the actual calculations and their theoretical underpinnings in classical mechanics.

This two-hundred-page book grew out of a series of lectures given by Susskind, but adds mathematical interludes that serve as refreshers on calculus. Even though they cover almost exactly the same material as the book, the lectures are a good complement: some explanations may be clearer in the classroom, often prompted by questions from the audience. Although Susskind is accompanied by Hrabovsky as a second author, the text mysteriously addresses the reader in the first person singular.

A typical first-semester physics textbook may cover less theory than The Theoretical Minimum in a thousand-page volume, although it would probably cover relativity theory, which is not discussed in this book. There are a few well-chosen exercises in The Theoretical Minimum, some quite easy and a few that take some time to solve. “You can be dumb as hell and still solve the problem”, as Susskind puts it in one of the lectures while discussing the Lagrangian formulation of mechanics versus Newton's equations. That quote fits as a description of the exercises too, as many of them can be solved without really gaining a solid understanding of how it all works.
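To make the quote concrete, here is the kind of mechanical procedure it refers to, using a harmonic oscillator as an illustrative example (chosen here, not necessarily one of the book's exercises): write down the Lagrangian $L = T - V$, take two partial derivatives, and plug them into the Euler-Lagrange equation.

$$L(x, \dot x) = \tfrac12 m \dot x^2 - \tfrac12 k x^2, \qquad \frac{d}{dt}\frac{\partial L}{\partial \dot x} - \frac{\partial L}{\partial x} = 0,$$

$$\frac{\partial L}{\partial \dot x} = m \dot x, \qquad \frac{\partial L}{\partial x} = -k x \quad\Longrightarrow\quad m \ddot x + k x = 0.$$

The result is Newton's second law $m\ddot x = -kx$ for a spring force, obtained without ever thinking about forces.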